Table of Contents

- Summary

- Hadoop in the enterprise

- Remember the mainframe?

- Mainframe, meet Hadoop

- Hadoop, meet mainframe

- Ops, meet the data scientist

- Conclusion

- Key takeaways

- About Paul Miller

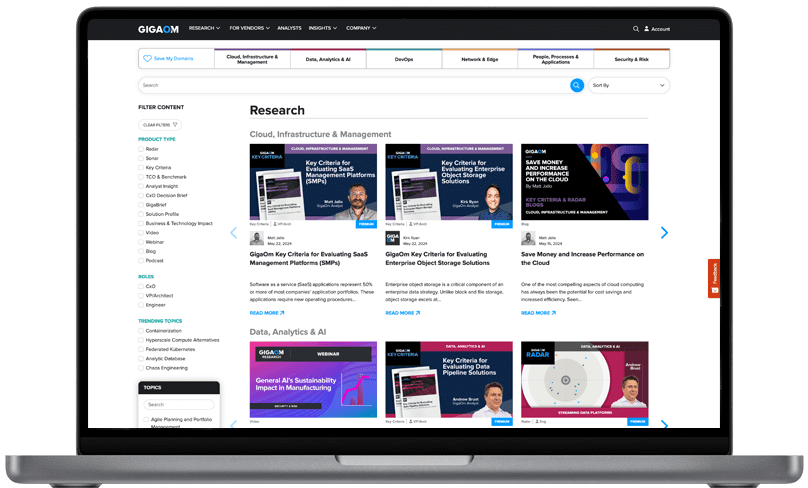

- About GigaOm

- Copyright

1. Summary

According to market leader IBM, there is still plenty of work for mainframe computers to do. Indeed, the company frequently cites figures indicating that 60 percent or more of global enterprise transactions are currently undertaken on mainframes built by IBM and remaining competitors such as Bull, Fujitsu, Hitachi, and Unisys. The figures suggest that a wealth of data is stored and processed on these machines, but as businesses around the world increasingly turn to clusters of commodity servers running Hadoop to analyze the bulk of their data, the cost and time typically involved in extracting data from mainframe-based applications become a cause for concern.

By finding more effective ways to bring mainframe-hosted data and Hadoop-powered analysis closer together, the mainframe-using enterprise stands to benefit from both its existing investment in mainframe infrastructure and the speed and cost-effectiveness of modern data analytics. It can do so without necessarily resorting to relatively slow and resource-intensive extract, transform, load (ETL) processes that endlessly move data back and forth between discrete systems.

Key findings include:

- According to figures cited by IBM, mainframes still account for 60 percent or more of global enterprise transactions.

- Traditional ETL processes can make it slow and expensive to move mainframe data into the commodity Hadoop clusters where enterprise data analytics processes are increasingly being run.

- In some cases, it may prove cost-effective to run specific Hadoop jobs on the mainframe itself.

- In other cases, advances in Hadoop’s stream-processing capabilities can offer a more cost-effective way to push mainframe data to a commodity Hadoop cluster than traditional ETL.

- The skills, outlook, and attitudes of typical mainframe system administrators and typical data scientists are quite different, creating challenges for organizations wishing to encourage closer cooperation between the two groups.

Feature image courtesy of Flickr user Steve Jurvetson