Table of Contents

- Summary

- What is Hadoop?

- Where does Hadoop make sense?

- What are the barriers to adoption in the enterprise?

- What are the risks of adoption in the enterprise?

- Mitigating risk by retaining focus

- Building on success

- Key takeaways

- About Paul Miller

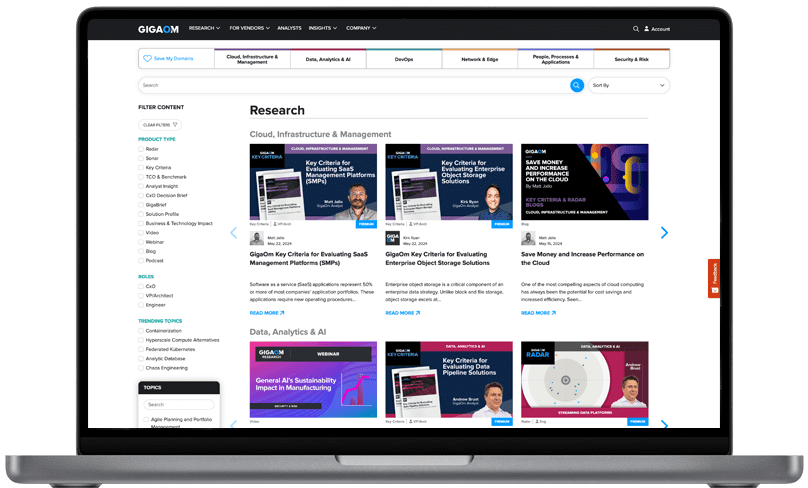

- About GigaOm

- Copyright

1. Summary

Hadoop-based solutions are increasingly encroaching on the traditional systems that still dominate the enterprise-IT landscape. While Hadoop has proved its worth, neither its wholesale replacement of existing systems nor the expensive and unconstrained build-out of a parallel and entirely separate IT stack makes good sense for most businesses. Instead, Hadoop should normally be deployed alongside existing IT and within existing processes, workflows, and governance structures.

Rather than initially embarking on a completely new project whose return on investment may prove difficult to quantify, there is value in identifying existing IT tasks that Hadoop can demonstrably perform better than the existing tools. ELT offload from the traditional enterprise data warehouse (EDW) is one clear use case in which Hadoop typically delivers quick and measurable value. It familiarizes enterprise-IT staff with the tools and their capabilities, persuades management of Hadoop's demonstrable value, and lays the groundwork for more ambitious projects to follow.

This paper explores the pragmatic steps involved in introducing Hadoop into a traditional enterprise-IT environment, considers the best use cases for early experimentation and adoption, and discusses how Hadoop can then move toward mainstream deployment as part of a sustainable enterprise-IT stack. Key findings include:

- Hadoop is an extremely capable and highly flexible tool. Early in an implementation, the combination of that flexibility with a poorly scoped project creates a real risk of scope creep and project bloat, decreasing the probability of success.

- It makes sense to apply Hadoop to well-understood problems as a pilot, creating opportunities to measure return on investment while allowing all concerned parties to concentrate on learning the platform.

- Hadoop can be an efficient and cost-effective tool for offloading some of the data processing currently done inside the EDW, freeing up capacity and creating the sort of measurable challenge early adopters of Hadoop need.

- Hadoop augments — but does not replace — the EDW.